Cloud Resume Challenge - AWS Edition

Intro

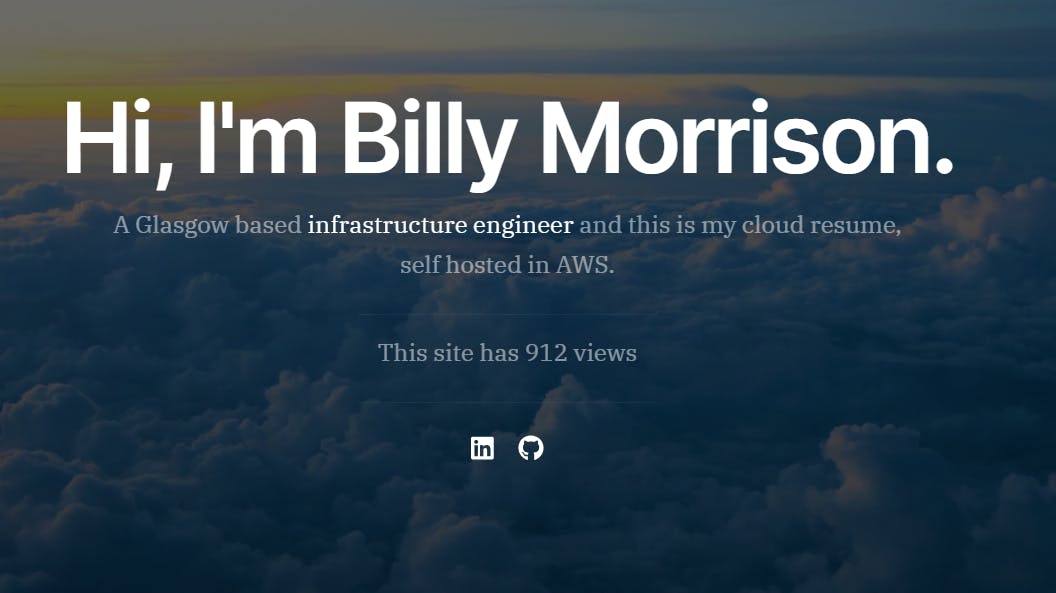

I was looking for a way to get hands-on with AWS and Terraform. Coming from an Azure background, I wanted a project that would cover the key concepts of AWS and give me a real understanding of the workflow involved in using it. The Cloud Resume Challenge does just that. Below I share my thoughts on the project, along with some of the challenges I overcame to get it up and running.

Building The Website

As this project is primarily focused on cloud technologies, I decided to use an HTML/CSS template. There are a lot of really good free templates out there, but I decided to go for this one. The website also required a bit of JavaScript to call the API hosted on AWS; the code is below.

// Fetch the view count from the API and show it on the page
const counter = document.querySelector(".view-counter");

async function updateCounter() {
  const response = await fetch("AWS-URL");
  const data = await response.json();
  counter.innerHTML = ` This site has ${data} views `;
}

updateCounter();

After writing the code, I set up an S3 bucket in AWS to host the front-end files. I also deployed a CloudFront distribution, AWS's CDN, which let me point a domain name I had purchased on Google Domains at the site.

As part of setting up CloudFront with the domain name, I also had to get an SSL certificate for the domain so that I could allow only HTTPS traffic to my site. This seemed easy at first but took some troubleshooting...

As the domain was on Google Domains, I had to configure the CNAME record for the site there. However, the two platforms format the record differently. After some time I realised that the CNAME copied from AWS already included the domain name at the end, and Google Domains was also appending it automatically, so it showed up twice, e.g. {CNAME}.bm27.xyz.bm27.xyz. Once I removed the second, unneeded copy of the domain from the CNAME, the SSL certificate verified in AWS Certificate Manager, which meant I could view the site live.
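The doubled domain can be avoided by trimming the zone name off the record before pasting it into a DNS provider that appends the zone itself. Here is a minimal Python sketch of that trimming step. The function name and the `_abc123` label are made up for illustration, and returning "@" for the bare domain is only the usual zone-file shorthand, not something either platform requires.

```python
def relative_record_name(fqdn: str, zone: str) -> str:
    """Return the part of a DNS name that sits in front of the zone name."""
    # DNS names are case-insensitive and may carry a trailing dot.
    name = fqdn.rstrip(".").lower()
    zone = zone.rstrip(".").lower()
    if name == zone:
        # Conventional shorthand for the zone apex (an assumption here).
        return "@"
    suffix = "." + zone
    if name.endswith(suffix):
        # Drop the zone so the DNS provider can append it exactly once.
        return name[: -len(suffix)]
    # Already relative: leave it untouched.
    return name


# Hypothetical validation record name that already ends with the zone.
print(relative_record_name("_abc123.bm27.xyz.", "bm27.xyz"))
```

Passing a name that is already relative returns it unchanged, so the helper is safe to run on whatever gets copied.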

DynamoDB

Finally, with the troubleshooting done and the front end mostly set up, I created the DynamoDB table. Its purpose is simple: to store the view count that my earlier JavaScript function retrieves through an API (foreshadowing). DynamoDB is a serverless NoSQL database, and it let me set up a table in a few clicks. The table only needed a single item to store the number of views.

Setting Up The Back-End / API

The back-end API for the website uses Lambda, AWS's serverless compute platform. I had never used Lambda before, so I didn't know where to start with the code. However, the course notes reference the Boto3 library, which was a massive help in getting this up and running.

Using the Boto3 documentation and some Stack Overflow searching, I ended up with the code below. Walking through it: the code first looks up the correct resource, in this case the DynamoDB table named "cloudresume" (which we created earlier). The handler then fetches the item whose key 'id' is '0', stores its count in the views variable, and increments it. Finally, it writes the new value back to the table and returns it.

import json
import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('cloudresume')

def lambda_handler(event, context):
    # Gets the item with id '0' from the table
    response = table.get_item(Key={
        'id': '0'
    })

    # Sets the views variable to the stored views value and increments it
    views = response['Item']['views']
    views = views + 1
    print(views)

    # Stores the updated view count in the DB
    response = table.put_item(Item={
        'id': '0',
        'views': views
    })

    return views

With this in place, the shell of the project/challenge was complete, and I could show the number of views on the landing page of my site.
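One thing worth noting about the handler above: it reads the count and writes it back in two separate calls, so two visits arriving at the same moment could both read the same value and one view would be lost. A possible alternative, sketched here as an assumption rather than what the site actually runs, is to let DynamoDB do the increment in a single `update_item` call. The `increment_views` helper and the `FakeTable` class are hypothetical names; `FakeTable` only exists so the function can be tried without an AWS account.

```python
def increment_views(table, item_id="0"):
    """Add 1 to the 'views' attribute in one request and return the new total."""
    response = table.update_item(
        Key={"id": item_id},
        # ADD creates the attribute if it is missing, then adds to it.
        UpdateExpression="ADD #v :inc",
        # A placeholder name avoids clashes with DynamoDB reserved words.
        ExpressionAttributeNames={"#v": "views"},
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    # boto3 hands numbers back as Decimal; convert to a plain int.
    return int(response["Attributes"]["views"])


class FakeTable:
    """In-memory stand-in for a boto3 Table, for local experiments only."""

    def __init__(self):
        self.views = 0

    def update_item(self, **kwargs):
        self.views += kwargs["ExpressionAttributeValues"][":inc"]
        return {"Attributes": {"views": self.views}}


fake = FakeTable()
print(increment_views(fake))
print(increment_views(fake))
```

Inside the Lambda, the same function would be called with the real table object, i.e. the `table` variable the handler already creates with `dynamodb.Table('cloudresume')`.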

Terraform IaC

I was already familiar with Terraform, having spent some time with it in Azure. However, as this project is built in AWS, I was not fully aware of the syntax etc. needed to create the infrastructure. Overall Terraform is great, and as it is a multi-cloud tool the switch was not too painful.

As I wanted a good, hands-on understanding of Terraform in AWS, I went ahead and set up the IaC for the whole infrastructure. Below is everything that I set up.

S3 Bucket

CloudFront

DynamoDB

Lambda Function

While setting up the IaC, I found a neat way to upload all the files in a given folder to an S3 bucket: combining for_each with the fileset function. It allowed me to fully automate the infrastructure and avoid uploading the files manually.

resource "aws_s3_object" "add_source_files" {
  bucket   = aws_s3_bucket.web_bucket.id
  for_each = fileset("${path.module}./website/", "**/*.*")
  key      = each.value
  source   = "${path.module}./website/${each.value}"
}
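To make it clearer what the resource above iterates over, here is a rough Python sketch of the same idea: walk a folder, and turn each file's path relative to that folder into the object key. It is only an illustration, not part of the Terraform setup. The `plan_uploads` name is hypothetical, and the content-type guess is an extra I have added on the assumption that a static site benefits from objects carrying a sensible MIME type.

```python
import mimetypes
from pathlib import Path


def plan_uploads(folder):
    """Map each file under folder to its object key and a guessed content type."""
    root = Path(folder)
    plan = {}
    # "*.*" mirrors the Terraform pattern: only names that contain a dot.
    for path in sorted(root.rglob("*.*")):
        if path.is_file():
            # The key is the path relative to the folder, with forward slashes.
            key = path.relative_to(root).as_posix()
            content_type, _ = mimetypes.guess_type(path.name)
            plan[key] = content_type or "application/octet-stream"
    return plan
```

Calling `plan_uploads("website")` on a folder holding `index.html` and `css/style.css` would give a dictionary keyed by `index.html` and `css/style.css`, which is the same set of keys the `for_each` produces.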

CI/CD

The CI/CD for this project was set up using GitHub Actions, as it integrates easily into my workflow. It consists of two workflows that run when I push an update to my main branch: one deploys/updates the Lambda function and the other makes any needed changes to the website.

The big thing when setting up the workflows was to make sure that no AWS keys end up in the .yml files. To prevent this, GitHub has a neat feature that stores the keys in a "Secrets and variables" vault, from which they can be referenced in the workflow when needed, as seen below.

name: Deploy Lambda
on:
  push:
    branches: [ main ]
jobs:
  deploy:
    name: deploy lambda function
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: AWS Lambda Deploy
        uses: appleboy/lambda-action@v0.1.9
        with:
          aws_access_key_id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws_secret_access_key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws_region: 'eu-west-2'
          function_name: terraform-func
          zip_file: 'aws-infra/packedlamda.zip'

The YAML files are fairly small, as both rely on prebuilt actions. The front-end setup was straightforward because it uses a widely known one. It took me quite some time to find the one for the back end, but I'm glad I did, as it improves the workflow drastically.
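As a safety net for the "no AWS keys in the .yml files" rule mentioned earlier, a small check can scan workflow text for anything shaped like an access key ID. This is a minimal sketch, not something the pipeline above includes. It assumes the common format of long-term access key IDs ("AKIA" followed by 16 uppercase letters or digits), and the `find_hardcoded_keys` name is hypothetical. The sample key is the placeholder used in AWS documentation, not a real credential.

```python
import re

# Assumed shape of a long-term AWS access key ID: "AKIA" + 16 characters.
ACCESS_KEY_PATTERN = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_hardcoded_keys(text):
    """Return (line number, match) pairs for anything resembling an access key ID."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_PATTERN.findall(line):
            hits.append((number, match))
    return hits


# The first line references a secret safely; the second hardcodes a key.
sample = (
    "aws_access_key_id: ${{ secrets.AWS_ACCESS_KEY_ID }}\n"
    "aws_access_key_id: AKIAIOSFODNN7EXAMPLE\n"
)
print(find_hardcoded_keys(sample))
```

A pattern like this only catches the key ID, not the secret access key itself, so it complements the GitHub secrets vault rather than replacing it.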

Conclusion

Overall I am very happy with what I got out of this project. I got a better understanding of the serverless options in AWS, as well as how a DevOps pipeline functions with Terraform and GitHub Actions. I also learned a lot about AWS and got a hands-on understanding of how the key concepts of the cloud don't differ all that much between platforms, which is nice to see, as it makes the skills I'm learning more applicable and transferable to current tech stacks and any new ones that inevitably appear in the future.

If you made it this far, thanks so much for reading :) And if you somehow got here without looking at my GitHub or website, you can view them below.